Domain Experts' Interpretations of Assessment Bias in a Scaled, Online Computer Science Curriculum

Abstract

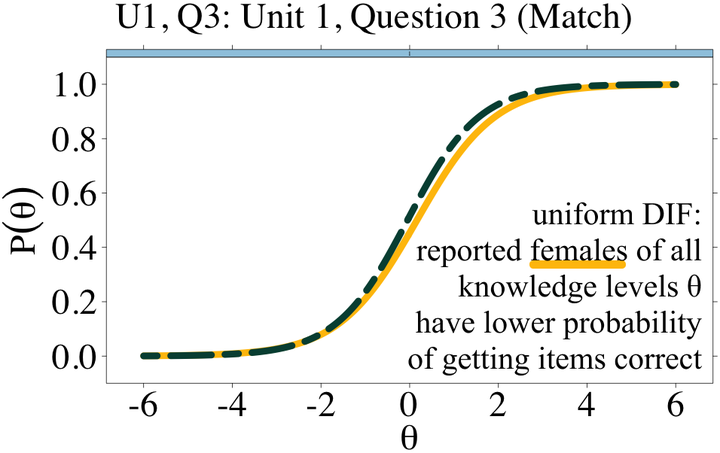

Understanding inequity at scale is necessary for designing equitable online learning experiences, but it is also difficult. Statistical techniques such as differential item functioning (DIF) can help identify whether items (i.e., questions) in an assessment exhibit potential bias by disadvantaging certain groups (e.g., whether an item disadvantages women compared with men of equivalent knowledge). While testing companies typically use DIF to identify items to remove, we explored how domain experts such as curriculum designers could use DIF to better understand how to design instructional materials that serve students from diverse groups. Using Code.org’s online Computer Science Discoveries (CSD) curriculum, we analyzed 139,097 responses from 19,617 students to identify DIF by gender and race in assessment items (e.g., multiple-choice questions). Of the 17 items, we identified six that disadvantaged students who reported as female compared with students who reported as non-binary or male. We also found that most items (13 of 17) disadvantaged AHNP (African/Black, Hispanic/Latinx, Native American/Alaskan Native, Pacific Islander) students compared with WA (white, Asian) students. We then conducted a workshop and interviews with seven curriculum designers and found that they interpreted item bias in relation to the intersection of item features and student identity, the broader curriculum, and the differing uses of assessments. We interpret these findings in the broader context of using data on assessment bias to inform domain experts’ efforts to design more equitable learning experiences.
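The abstract does not say which DIF procedure was used, so the following is only a minimal sketch of one common technique, the Mantel-Haenszel (MH) procedure, to illustrate what "disadvantaging students of equivalent knowledge" means computationally. It assumes students are matched on total assessment score (the stratum), compares a reference group (e.g., students who reported as male) with a focal group (e.g., students who reported as female), and reports the MH common odds ratio along with the ETS delta-scale statistic MH D-DIF = -2.35 ln(alpha_MH). The function name `mantel_haenszel_dif`, the helper `make_records`, the record format, and the example counts are all hypothetical.

```python
import math
from collections import defaultdict


def mantel_haenszel_dif(records):
    """Estimate Mantel-Haenszel DIF for a single item (illustrative sketch).

    records: iterable of (group, stratum, correct) tuples, where
      group   is "reference" or "focal",
      stratum is the matching variable (assumed here: total test score),
      correct is 1 if the student answered the item correctly, else 0.
    Returns (alpha_mh, mh_d_dif).
    """
    # counts[stratum] = [A, B, C, D]
    #   A: reference correct, B: reference incorrect
    #   C: focal correct,     D: focal incorrect
    counts = defaultdict(lambda: [0, 0, 0, 0])
    for group, stratum, correct in records:
        if group == "reference":
            idx = 0 if correct else 1
        elif group == "focal":
            idx = 2 if correct else 3
        else:
            raise ValueError(f"unknown group: {group!r}")
        counts[stratum][idx] += 1

    num = 0.0  # sum over strata of A*D/N
    den = 0.0  # sum over strata of B*C/N
    for a, b, c, d in counts.values():
        n = a + b + c + d
        num += a * d / n
        den += b * c / n
    if num == 0 or den == 0:
        raise ValueError("odds ratio undefined: a cross-product sum is zero")

    # alpha_mh > 1: at the same total score, the reference group has higher
    # odds of answering correctly than the focal group.
    alpha_mh = num / den
    # ETS delta scale: negative values mean the item is harder for the focal group.
    mh_d_dif = -2.35 * math.log(alpha_mh)
    return alpha_mh, mh_d_dif


def make_records(group, stratum, n_correct, n_incorrect):
    """Hypothetical helper that expands counts into per-student records."""
    return [(group, stratum, 1)] * n_correct + [(group, stratum, 0)] * n_incorrect


# Made-up counts for two score strata, for illustration only.
records = (
    make_records("reference", 1, 8, 2) + make_records("focal", 1, 6, 4)
    + make_records("reference", 2, 9, 1) + make_records("focal", 2, 7, 3)
)
alpha, d_dif = mantel_haenszel_dif(records)
print(f"alpha_MH = {alpha:.3f}, MH D-DIF = {d_dif:.2f}")
# alpha_MH = 3.105, MH D-DIF = -2.66
```

Stratifying on total score is what separates DIF from a raw gap in percent correct: groups are compared only among students with equivalent overall performance. In practice a significance test and a chosen effect-size threshold would also be applied before flagging an item; this sketch omits both.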

Code.org is a non-profit organization that engages a global community of computer science teachers and learners. Core to its mission is ensuring that its learning materials are inclusive of the diverse subpopulations that rely on them to teach and learn computing. While careful design of learning materials is important for ensuring equitable outcomes, instructional designers must also continually iterate on and improve these materials. To inform this iteration process, I conducted a psychometric analysis of learner response data to identify, validate, and address equity issues within the CS Discoveries curriculum.

To understand how equitable learning outcomes are, and to improve them, I used methods from psychometrics, the learning sciences, and human-computer interaction. This process involved research-practice partnerships in which we considered how end users (e.g., curriculum designers) interpreted and used assessment scores, analyzed learner response data to identify potentially problematic patterns, and considered how to make equitable changes.

Impact

Code.org made changes to reduce bias in its CS Discoveries curriculum, which is used by more than 150,000 students per year.

This work was in collaboration with Code.org and the UW College of Education and was funded by an NSF INTERN award.